
Short-Term Impact of $3 Billion School Improvement Grants: Zilch; Long-Term Impact: TBD

- By Dian Schaffhauser

- 03/13/17

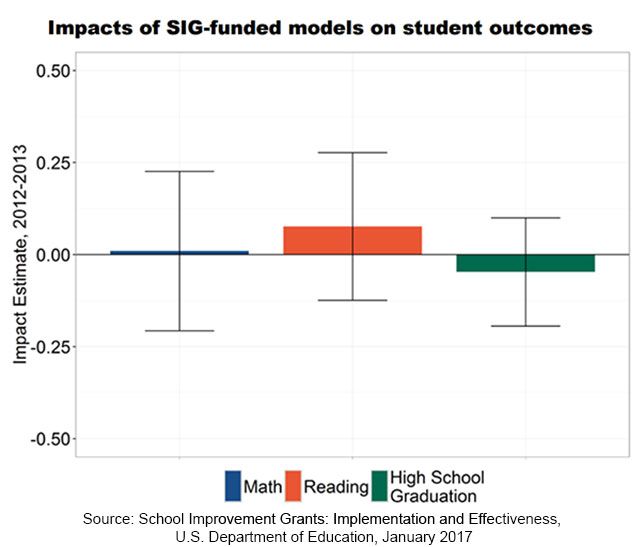

A hefty study commissioned by the Institute of Education Sciences (IES) and written by researchers at Mathematica has examined the impact of School Improvement Grants (SIGs) and found that implementing a SIG-funded model "had no impact on math or reading test scores, high school graduation or college enrollment."

As "School Improvement Grants: Implementation and Effectiveness" stated, SIGs were a signature $3 billion program delivered during the Obama administration as part of its American Recovery and Reinvestment Act of 2009. The idea was to award grants to states that agreed to implement one of four intervention models — transformation, turnaround, restart or closure — in their lowest-performing schools. Each model "prescribed specific practices" that were designed to improve student learning, including outcomes for high-need students.

With the implementation of the Every Student Succeeds Act, that best-practices approach (with its guardrails) no longer shows up in the SIG program. Now states and districts have a great deal more flexibility in how they attempt to turn around their lowest-achieving schools. But before institutional memory fades regarding that initial SIG investment, the institute wanted to find out the answers to four basic questions:

- Did schools implementing a SIG-funded model use the improvement practices pushed by the SIGs?

- Did those practices include a focus on English language learners (ELLs), and did any focus differ between those schools implementing a SIG-funded model and those that didn't?

- Did the funding have any impact on outcomes for low-performing schools?

- Did the specific intervention model implemented have any impact on outcomes?

This isn't the first time SIGs have been evaluated. Three previous reports examined SIG implementation practices and states' capabilities in supporting school turnaround. This fourth report builds on those earlier research efforts and adds another year of data. It also takes up the overall question of whether SIGs improved student outcomes.

The improvement practices that made up the four intervention models focused on four areas:

- Adopting comprehensive instructional reform strategies, such as the use of data in implementing instructional programs;

- Developing and increasing teacher and principal effectiveness, such as using "rigorous, transparent and equitable evaluation systems";

- Increasing learning time and creating "community-oriented" schools that engage families and meet "students' social, emotional and health needs"; and

- Having operational flexibility in hiring, discipline and budget; and receiving technical assistance and support.

The research team looked at the SIG-promoted practices in two ways: It conducted a descriptive analysis that compared the use of the practices in 290 schools under SIG-funded models in 2012-2013 with their use in 190 schools that weren't under those models; and it analyzed data from 460 schools to figure out whether implementation of a SIG-funded model in 2010-2011 had any impact on the use of the practices.

To sort out whether the SIG program had any impact on student outcomes, the researchers analyzed data to measure impact on test scores, graduation rates and college enrollment in a sample of 190 schools eligible for SIG and 270 schools that weren't.

They also undertook an examination of the various intervention models themselves to figure out whether student achievement varied by model. In this, the researchers tapped data from a sampling of 270 schools implementing a SIG model.

The 419-page report distills its conclusions into five key findings:

- Although schools implementing SIG-funded models reported using more SIG-promoted practices than other schools, there was no statistically significant evidence that SIG caused those schools to implement more practices.

- Across all study schools, use of SIG-promoted practices was highest in comprehensive instructional reform strategies (89 percent, or 7.1 of the eight SIG-promoted practices) and lowest in operational flexibility and support (43 percent, or 0.87 of the two promoted practices).

- There were no significant differences in the use of ELL-focused practices between schools running a SIG-funded model and those that didn't.

- Overall, across all grades, the implementation of any of the SIG-funded models had "no significant impacts on math or reading test scores, high school graduation or college enrollment."

- In grades 2 through 5, there was no evidence of one model being more effective at generating higher learning gains than any other model. In higher grades (6 through 12), the turnaround model saw larger student gains in math than the transformation model; however, the report noted, other factors may explain the variances as well, such as "baseline differences between schools implementing different models."

In her coverage of the report for the Thomas Fordham Institute, research intern Lauren Gonnelli pointed to two disparate reactions to the findings.

First, there was Andy Smarick's response. Smarick, a resident fellow at the conservative think tank American Enterprise Institute, called the results "devastating to Arne Duncan's and the Obama administration's education legacy." As he declared, "The program failed spectacularly."

Morgan Polikoff, an associate professor of education at the University of Southern California's Rossier School of Education, was more circumspect. His take: When it comes to policy evaluation, judgments based on early evaluations can turn out to be "misleading." As he noted in an article on C-SAIL, a website supported by IES, although the impact evaluation of the SIG initiative was "neutral, several studies have found positive effects and many have found impacts that grow as the years progress (suggesting that longer-term evaluations may yet show effects)." In other words, sustained improvement efforts such as the ones prompted by the school improvement grants could take a long time to show their impact. His suggestion: Don't declare failure too soon.

The full report is freely available on the Mathematica Policy Research site.

About the Author

Dian Schaffhauser is a former senior contributing editor for 1105 Media's education publications THE Journal, Campus Technology and Spaces4Learning.