Rubrik, Okta Partner on AI-Driven Identity Threat Protection

Cybersecurity companies Rubrik and Okta are partnering to offer a new AI-powered identity threat protection solution.

Specifically, Rubrik Security Cloud will integrate with Identity Threat Protection with Okta AI to provide critical user context to accelerate threat detection and response.

Rubrik will provide Okta with key user information, including e-mail addresses and details about the sensitive files those users have accessed. By merging Rubrik's user access risk signals with threat data from other security tools (such as endpoint detection and response, or EDR), Okta can better assess overall risk and automate responses to counter identity-based threats.

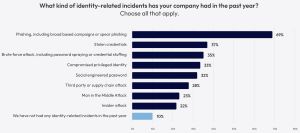

Identity-related cybersecurity threats are on the rise and take many forms, according to 2024 research from the vendor-neutral Identity Defined Security Alliance (IDSA).

Identity-Related Incidents (source: Identity Defined Security Alliance).

"When Okta Identity Threat Protection combines Rubrik's user risk signals with other security signals, Okta can accurately determine overall risk levels and automate threat response accordingly," Rubrik said in a blog post. "For example, it can take actions on a high-risk user such as logging them out of a certain device or requiring re-authentication. These remediation steps help mitigate potential threats by revoking access or prompting additional verification when suspicious activity is detected. Upon learning about user risk changes, Okta can take an action on potential threats, reducing the operational burden on security teams."

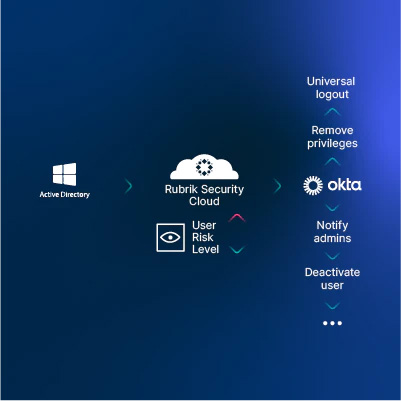

A Rubrik diagram giving a high-level overview of the integration shows that Microsoft Active Directory also plays a role in a three-step process:

- Rubrik knows the user's identity based on information from Microsoft Active Directory.

- Rubrik Security Cloud assigns the user a risk level based on the sensitivity of the data they can access.

- When Rubrik detects a change in a user's risk level, it shares this with Okta Identity Threat Protection, which can then take a response action.

High-Level View of Integration (source: Rubrik).
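To make the three steps above concrete, here is a minimal Python sketch of the general pattern: resolve an identity, score it by data sensitivity, and notify a responder only when the score changes. It does not use any Rubrik or Okta API. Every name in it (`DirectoryUser`, `assign_risk`, `RiskTracker`, `choose_response`), the thresholds, and the action strings are assumptions invented for illustration; the two actions simply echo the examples Rubrik gives (logging a user out of a device, requiring re-authentication).

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import Callable, Dict


class RiskLevel(IntEnum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass(frozen=True)
class DirectoryUser:
    """Step 1 (hypothetical): an identity as resolved from a directory."""
    email: str
    sensitive_files_accessible: int  # invented stand-in for data sensitivity


def assign_risk(user: DirectoryUser) -> RiskLevel:
    """Step 2 (hypothetical): map data sensitivity to a risk level.

    The thresholds are made up for this sketch, not taken from either vendor.
    """
    if user.sensitive_files_accessible >= 100:
        return RiskLevel.HIGH
    if user.sensitive_files_accessible >= 10:
        return RiskLevel.MEDIUM
    return RiskLevel.LOW


def choose_response(new_level: RiskLevel) -> str:
    """Pick an illustrative response action for the new risk level."""
    if new_level is RiskLevel.HIGH:
        return "log_out_of_device"
    if new_level is RiskLevel.MEDIUM:
        return "require_reauthentication"
    return "no_action"


class RiskTracker:
    """Step 3 (hypothetical): report only *changes* in a user's risk level."""

    def __init__(self, on_change: Callable[[str, RiskLevel, RiskLevel], None]):
        self._last: Dict[str, RiskLevel] = {}
        self._on_change = on_change

    def observe(self, user: DirectoryUser) -> RiskLevel:
        level = assign_risk(user)
        previous = self._last.get(user.email)
        self._last[user.email] = level
        # The first sighting of a user sets a baseline and is not a change.
        if previous is not None and previous != level:
            self._on_change(user.email, previous, level)
        return level


if __name__ == "__main__":
    def notify(email: str, old: RiskLevel, new: RiskLevel) -> None:
        print(f"{email}: {old.name} -> {new.name}, action={choose_response(new)}")

    tracker = RiskTracker(notify)
    tracker.observe(DirectoryUser("user@example.com", 5))    # baseline only
    tracker.observe(DirectoryUser("user@example.com", 150))  # triggers notify
```

The design choice worth noting is that the tracker stays silent until a level actually changes, which mirrors the article's description of Rubrik sharing a signal when it "detects a change in a user's risk level" rather than streaming every score.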

"Rubrik is the first data security platform of its kind to build an integration with Identity Threat Protection with Okta AI, to help you proactively detect changes in your users' sensitive data access risk levels and automate remediation," the companies explained on an Okta partner site set up by Rubrik and titled "Mitigate identity-based threats with user intelligence." The site lists the Okta integration as "coming soon."

About the Author

David Ramel is an editor and writer for Converge360.