Report: AI Security Controls Lag Behind Adoption of AI Cloud Services

According to a recent report from cybersecurity firm Wiz, nearly nine out of 10 organizations are already using AI services in the cloud, but fewer than one in seven have implemented AI-specific security controls. The company surveyed 100 cloud professionals, including architects, engineers, directors, and C-level leaders, spanning 96 organizations across multiple industries. It found that security teams face a critical skills and tooling gap that could undermine enterprise AI initiatives, particularly as shadow AI continues to proliferate and hybrid cloud architectures become more complex.

AI Adoption Outpaces Security Expertise

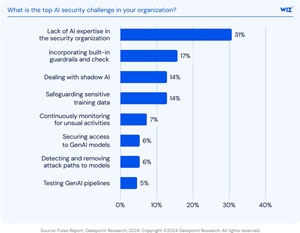

According to the report, AI Security Readiness: Insights from 100 Cloud Architects, Engineers, and Security Leaders, 87% of organizations are already using AI services, such as OpenAI or Amazon Bedrock. But 31% of respondents identified a lack of AI security expertise as their top concern — making it the most commonly cited challenge.

What is the top AI security challenge in your organization? (source: Wiz/Gatepoint).

"Security teams are being asked to protect systems they may not fully understand," the report noted, "and this expertise gap creates a growing risk surface." Tooling and automation are described as "critical" until that skills gap is addressed.

Traditional Controls Still Dominate

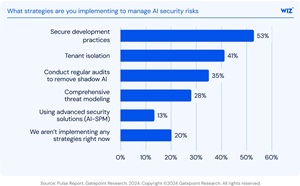

Only 13% of organizations currently use AI-specific security posture management (AI-SPM) tools. Instead, most rely on traditional controls more suited to legacy environments:

- Secure development practices: 53%

- Tenant isolation: 41%

- Audits to identify shadow AI: 35%

What strategies are you implementing to manage AI security risks? (source: Wiz/Gatepoint).

While these remain important, the report emphasized that they are not designed to address the unique risks of AI systems, including lateral model access, poisoned training data, and unmonitored use of generative APIs.

Cloud Complexity Increases Risk, Reduces Visibility

Hybrid and multi-cloud deployments are the norm, with 45% of organizations operating in hybrid environments and 33% in multi-cloud. Yet 70% of respondents still rely on endpoint detection and response (EDR) — a toolset built for centralized architectures.

The following table summarizes cloud usage among surveyed organizations:

| Architecture | Percentage |
| --- | --- |
| Hybrid Cloud | 45% |
| Multi-Cloud | 33% |
| Single Cloud | 22% |

Meanwhile, 25% of respondents admitted they don't know what AI services are currently running in their environment.

Security Needs Go Beyond Technology

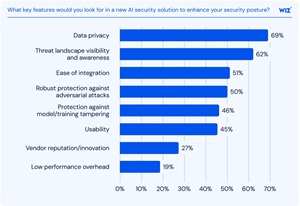

The most desired features in AI security tools reflect broader operational and workflow concerns. According to the survey:

- 69% prioritized data privacy

- 62% cited threat visibility

- 51% called for ease of integration

What AI services and technologies are currently running in your environment? (source: Wiz).

The report cautioned that difficulty integrating with DevOps workflows is a major barrier to adoption. Decentralized experimentation also creates blind spots that traditional security models can't address.

Security Maturity Model for AI

Wiz mapped AI readiness onto its broader Cloud Security Maturity Framework, describing four stages of AI security maturity that align with four phases of cloud security development:

| Phase | Maturity Stage | Description |
| --- | --- | --- |
| 1 | Experimental AI | High-risk use of AI with limited visibility and shadow deployments |
| 2 | Early Governance | Basic controls in place, but AI-specific risks not well managed |
| 3 | AI-Integrated Security | Embedded controls, AI-SPM tools in use, improved governance |
| 4 | Proactive AI SecOps | Automation and real-time response to AI risks across environments |

Cloud Security Maturity Framework (source: Wiz).

Most organizations, the report said, remain in phases 1 or 2.

Recommendations to Close the Gap

To move forward, the report outlined key actions for IT and security teams:

- Adopt tools for continuous discovery of AI models and shadow services

- Shift security left into earlier stages of the SDLC

- Ensure policies follow workloads across multi-cloud and hybrid environments

- Provide AI-specific training for security professionals
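As a rough illustration of the first recommendation, the sketch below shows one way a team might flag unapproved AI services in a resource inventory. It is not taken from the report and does not reflect how Wiz or any AI-SPM product works. The `Resource` fields, the keyword list, and the approved set are all hypothetical, and a real inventory would come from a cloud provider's own asset data rather than a hard-coded list.

```python
from dataclasses import dataclass

# Hypothetical keywords used to recognize AI-related services in an inventory.
AI_SERVICE_KEYWORDS = ("bedrock", "openai", "sagemaker", "vertex-ai")


@dataclass(frozen=True)
class Resource:
    """One inventory record; the field names are illustrative assumptions."""
    account: str
    service: str


def is_ai_service(service: str) -> bool:
    """Return True if the service name contains a known AI keyword."""
    name = service.lower()
    return any(keyword in name for keyword in AI_SERVICE_KEYWORDS)


def find_shadow_ai(inventory, approved):
    """Return AI resources whose (account, service) pair is not approved."""
    return [
        r for r in inventory
        if is_ai_service(r.service) and (r.account, r.service) not in approved
    ]


if __name__ == "__main__":
    # Made-up sample data, for demonstration only.
    inventory = [
        Resource("prod", "amazon-bedrock"),
        Resource("dev-sandbox", "openai-api"),
        Resource("prod", "object-storage"),
    ]
    approved = {("prod", "amazon-bedrock")}
    for resource in find_shadow_ai(inventory, approved):
        print(f"Unapproved AI service: {resource.service} in {resource.account}")
```

Run on a schedule against a fresh inventory export, a check like this gives a crude form of continuous discovery. Keyword matching will miss services it does not know about, which is part of why the report points to dedicated tooling.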

"Security can't be reactive," the report concluded. "It must be continuous and proactive."

For the full report, go to the Wiz site (registration required).